Switching to an OpenTelemetry Operator-managed Collector on Kubernetes

A hands-on approach featuring the OpenTelemetry Operator and OpenTelemetry Demo on Kubernetes

When running the OTel Demo App for the first time, most folks tend to run it locally using Docker Compose. And why not? It’s a relatively low barrier to entry. But let’s face it...in the Real World™, we don’t run our production apps on our desktops with Docker Compose. Most of us probably turn to a workload orchestrator like Kubernetes or HashiCorp Nomad. Wouldn’t it be nice to see an example of the OTel Demo running on Kubernetes to get more of that Real World feel?

Luckily, the OTel Demo repo comes with Kubernetes manifests for deploying the Demo App. And if you really want to get fancy, you can even use the OTel Demo Helm chart. Given all that, you might be wondering what I’m doing here. Am I just rehashing stuff that’s already been documented? Not quite!

While the OTel Demo on Kubernetes is great as-is, it doesn’t come with a Collector managed by the OTel Operator. And if you’ve read my previous work, you know that I am a HUGE fan of the OTel Operator. So I asked myself, “What if I modified the OTel Demo on Kubernetes to run a Collector managed by the OTel Operator instead?”

But what’s the point of doing all this? Well, the Operator, among other things, is great for managing the deployment and configuration of the OTel Collector. If you were an early adopter of OpenTelemetry on Kubernetes, chances are you’ve been deploying and managing your Collectors sans Operator, since the Operator is a newer component of OpenTelemetry. This blog post provides you with a path for swapping out your “old” Collectors on Kubernetes for ones managed by the OTel Operator.

I will be demonstrating this by deploying the OpenTelemetry Demo App on Kubernetes using a Collector managed by the OTel Operator. I will also be using Dynatrace as the Observability backend (feel free to replace with your own favourite backend).

Let’s do this!

Tutorial

I’m a huge fan of Development (Dev) Containers, so I created one for running this example. While it is totally optional, I recommend it just because it gives you an environment with all of the tools you need to run the Demo App in Kubernetes.

Pre-requisites

A Kubernetes cluster v1.33+. The Dev Container comes with KinD, so you can spin up a KinD cluster, if you’d like (instructions below).

A Dynatrace account and access token. Learn how to get a trial account and generate an access token here.

For Dev Containers:

Here we go!

1- Clone the repo

Start by cloning the repo:

git clone git@github.com:avillela/otel-demo-k8s-dt.git

cd otel-demo-k8s-dt

2- Build and run the Dev Container

NOTE: If Dev Containers aren’t your jam, you can go ahead and skip this step.

Next, build and run the Dev Container.

devcontainer build --no-cache

devcontainer open

The build and open steps will take a few minutes when you run them for the first time.

You only need to build the Dev Container the first time you run the Demo. After that, you just need to run devcontainer open any time you want to run the Demo. That is, unless devcontainer.json has changed, in which case you should rebuild.

3- Remove the Collector from the OTel Demo Manifest

The OTel Demo repo comes with a Kubernetes manifest, which includes the YAML definitions for deploying an OTel Collector.

I used the original file as a start, but then made the following modifications:

1) Updated namespaces. The original manifest deploys some resources to the default namespace and some to the otel-demo namespace. I’m personally not a fan of deploying resources to default, so I’ve modified the file to deploy all resources to otel-demo.

2) Removed Collector references from the Demo App’s Kubernetes manifest, since I want to deploy a Collector managed by the OTel Operator. I removed any ServiceAccount, ConfigMap, ClusterRole, ClusterRoleBinding, Service, and Deployment having metadata.name: otel-collector.
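If you want to double-check which resources need to go before you start deleting, a small helper along these lines can list the kind of every document in a multi-document manifest whose metadata.name is otel-collector. This is a rough sketch and not part of the repo: the function name list_kinds_named and the path in the usage comment are hypothetical, and it assumes two-space indentation and unquoted names.

```shell
#!/usr/bin/env bash
# Sketch: print the kind of each YAML document whose metadata.name matches a target.
# Assumes '---' document separators, top-level 'kind:' and 'metadata:' keys,
# two-space indentation, and unquoted names.
list_kinds_named() {
  local manifest="$1" target="$2"
  awk -v target="$target" '
    function emit_doc() {
      if (name == target && kind != "") print kind
      kind = ""; name = ""; in_meta = 0
    }
    /^---/       { emit_doc(); next }     # document boundary
    /^kind:/     { kind = $2 }
    /^metadata:/ { in_meta = 1; next }
    /^[^ #]/     { in_meta = 0 }          # any other top-level key ends metadata
    in_meta && /^  name:/ { name = $2 }   # metadata.name only, not nested names
    END          { emit_doc() }
  ' "$manifest"
}

# Usage (hypothetical path):
#   list_kinds_named path/to/opentelemetry-demo.yaml otel-collector
```

Each kind it prints is a document to remove from the manifest.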

4- Create an OpenTelemetryCollector resource

Since we’re replacing the OpenTelemetry Collector (and associated components) in the OTel Demo’s Kubernetes manifest with an OTel Operator-managed Collector, we’ll need to create an OpenTelemetryCollector resource. Say wut?

The OTel Operator introduces a custom resource called OpenTelemetryCollector, which is used for managing the deployment and configuration of the OTel Collector. Here’s a snippet of what mine looks like (see full definition here):

apiVersion: opentelemetry.io/v1beta1
kind: OpenTelemetryCollector
metadata:
  name: otel
  namespace: otel-demo
  labels:
    app.kubernetes.io/name: opentelemetry-collector
    app.kubernetes.io/instance: opentelemetry-demo
    app.kubernetes.io/version: "0.128.0"
    app.kubernetes.io/component: opentelemetry-operator
spec:
  mode: statefulset
  image: otel/opentelemetry-collector-contrib:0.128.0
  serviceAccount: otelcontribcol
  ...
  config:
    ...

Let’s break things down.

1) Deployment modes

The OpenTelemetryCollector supports 4 deployment modes, which you configure via spec.mode. The supported modes are: deployment, sidecar, daemonset, or statefulset. If you leave out mode, it defaults to deployment. I’m using a statefulset, but feel free to use whichever mode best suits you.

When you specify deployment, statefulset, or daemonset mode, a Deployment, StatefulSet, or DaemonSet resource, respectively, is created, along with a corresponding Service. They are named <collector_CR_name>-collector. My OpenTelemetryCollector resource is called otel (per metadata.name), so the Operator will create a StatefulSet and a Service called otel-collector.
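To see that naming rule in action, something like the following could be used once the resource is deployed. It is only a sketch: the helper names collector_resource_name and show_collector_resources are made up for illustration, and it assumes statefulset mode and a kubectl context pointing at your cluster.

```shell
#!/usr/bin/env bash
# Name of the workload and Service the Operator derives from the CR name.
collector_resource_name() {
  printf '%s-collector\n' "$1"
}

# Show the StatefulSet and Service created for a given OpenTelemetryCollector.
# Arguments: CR name, namespace. Assumes spec.mode is statefulset.
show_collector_resources() {
  local cr_name="$1" namespace="$2" name
  name="$(collector_resource_name "$cr_name")"
  kubectl get "statefulset/${name}" "service/${name}" -n "$namespace"
}

# Usage: show_collector_resources otel otel-demo
```

For deployment or daemonset mode, swap statefulset for the matching resource type.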

When you specify a sidecar deployment mode, a Collector sidecar container is created in your application’s pod, named otc-container. You also have to add an annotation to your application’s Deployment resource, under spec.template.metadata.annotations:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-deployment-with-sidecar
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-pod-with-sidecar
  template:
    metadata:
      labels:
        app: my-pod-with-sidecar
      annotations:
        sidecar.opentelemetry.io/inject: "true"
        instrumentation.opentelemetry.io/inject-python: "true"
    spec:
      containers:
        - name: py-otel-server
          image: otel-python-lab:0.1.0-py-otel-server
          ports:
            - containerPort: 8082
              name: py-server-port

2) Image name

If you leave out spec.image, the OTel Operator will use the latest compatible version from the opentelemetry-collector (Collector core) repo. That won’t do you much good, since it’s missing many of the exporters, processors, connectors, and receivers that we often rely on. Instead, you should specify an image from opentelemetry-collector-contrib or use your own custom Collector image. Learn more here.

3) RBAC

I created my own ServiceAccount, ClusterRole, and ClusterRoleBinding for my Operator-managed Collector, to keep them separate from the ones in the original OTel Demo manifest. I could, however, have just left the ones from the manifest. It’s really up to you.

You reference the Collector’s ServiceAccount in the OpenTelemetryCollector resource’s spec.serviceAccount config.

✨ NOTE: The Operator creates a default ServiceAccount for you named <collector_CR_name>-collector if you don’t specify one, but you still have to create the ClusterRole and ClusterRoleBinding for it.
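For reference, here is roughly what that trio of RBAC resources can look like, rendered from a small shell function. Treat it as a sketch under assumptions: render_collector_rbac is a hypothetical helper, and the rules list is my guess at what a Collector using the k8sattributes processor typically needs to read, not a copy of the rules in my repo. Trim or extend it to match your own Collector config.

```shell
#!/usr/bin/env bash
# Sketch: emit a ServiceAccount, ClusterRole, and ClusterRoleBinding for the Collector.
# Arguments: resource name, namespace.
# The rules below are an ASSUMPTION (read access commonly needed for Kubernetes
# metadata enrichment); adjust them for the components in your Collector config.
render_collector_rbac() {
  local name="$1" namespace="$2"
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${name}
  namespace: ${namespace}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ${name}
rules:
  - apiGroups: [""]
    resources: ["pods", "namespaces", "nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["apps"]
    resources: ["replicasets"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ${name}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ${name}
subjects:
  - kind: ServiceAccount
    name: ${name}
    namespace: ${namespace}
EOF
}

# Usage: render_collector_rbac otelcontribcol otel-demo | kubectl apply -f -
```

The ServiceAccount name passed in should match spec.serviceAccount in the OpenTelemetryCollector resource.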

4) Collector configuration

The OpenTelemetryCollector resource includes a spec.config section for configuring the OTel Collector. If you’re not using the Operator, you need to create a ConfigMap with the YAML config for the Collector, like the one in the original Kubernetes manifest for the OTel Demo. I took the Collector config from the ConfigMap included in the OTel Demo YAML, and ported it over to the OpenTelemetryCollector resource’s spec.config with two modifications. First, I changed my backend to Dynatrace (more on that in the next section). Second, I updated the configuration for Collector self-monitoring metrics. Learn more about Collector self-monitoring configuration here.

You can see the full Collector config here.

✨ NOTE: For more on the OTel Operator, check out the talk that I did with Reese Lee on troubleshooting the OTel Operator. You can also check out my collection of OTel Operator articles.

5- Set up the Collector exporter

In order to export the OpenTelemetry data to Dynatrace, we need to configure the OTLP HTTP exporter in the OpenTelemetryCollector resource as follows:

exporters:
  otlphttp/dt:
    endpoint: "https://${DT_ENV}/api/v2/otlp"
    headers:
      Authorization: "Api-Token ${DT_TOKEN}"

Note that the exporter configuration references two environment variables: DT_TOKEN and DT_ENV. Both DT_TOKEN and DT_ENV are mounted as environment variables from a Secret called otel-collector-secret in OpenTelemetryCollector.

env:
  - name: DT_TOKEN
    valueFrom:
      secretKeyRef:
        key: DT_TOKEN
        name: otel-collector-secret
  - name: DT_ENV
    valueFrom:
      secretKeyRef:
        key: DT_ENV
        name: otel-collector-secret

And the Secret? You create it like this:

tee -a src/k8s/otel-collector-secret-dt.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: otel-collector-secret
  namespace: otel-demo
data:
  DT_TOKEN: <base64-encoded-dynatrace-token>
  DT_ENV: <base64-encoded-dynatrace-environment-identifier>
type: "Opaque"
EOF

Where:

DT_TOKEN is your Dynatrace access token (see the pre-requisites section above).

DT_ENV is your Dynatrace environment host. It will look something like this: <your-dynatrace-tenant>.apps.dynatrace.com. Leave off the https:// prefix, since the exporter endpoint above already includes it. Learn how to find your Dynatrace tenant here.

You’ll need to Base64-encode both DT_TOKEN and DT_ENV like this:

printf '%s' '<your-dynatrace-token>' | base64

Using printf instead of echo keeps a trailing newline out of the encoded value. Or you can Base64-encode it through this website.
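If you’d rather not paste encoded values by hand, the encoding and the Secret manifest can be generated together. The following is a minimal sketch, not what the repo does: b64 and render_dt_secret are hypothetical helpers, and it assumes the Secret should live in the same namespace as the Collector, passed in as the third argument.

```shell
#!/usr/bin/env bash
# Base64-encode a value without the trailing newline that plain echo would add.
# tr strips the line wrapping that some base64 implementations insert.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Render the Secret manifest from raw (unencoded) values.
# Arguments: token, environment value, namespace.
render_dt_secret() {
  local token="$1" dt_env="$2" namespace="$3"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: otel-collector-secret
  namespace: ${namespace}
type: Opaque
data:
  DT_TOKEN: $(b64 "$token")
  DT_ENV: $(b64 "$dt_env")
EOF
}

# Usage (the two variables are placeholders for your own values):
#   render_dt_secret "$MY_DT_TOKEN" "$MY_DT_ENV" otel-demo > src/k8s/otel-collector-secret-dt.yaml
```

A stray newline in the encoded token ends up inside the Authorization header, which is why the helper avoids echo.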

Check out the full OpenTelemetryCollector YAML here.
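One thing that is easy to miss: defining an exporter is not enough, because the Collector only uses exporters that are listed in a pipeline under service.pipelines. Here is a stripped-down sketch of how the otlphttp/dt exporter could be wired in. The receiver endpoints, the batch processor, and the helper name render_config_fragment are my assumptions for illustration; the full config linked above has more going on.

```shell
#!/usr/bin/env bash
# Sketch of a spec.config fragment that wires the otlphttp/dt exporter into pipelines.
# The quoted 'EOF' keeps ${DT_ENV} and ${DT_TOKEN} literal, so the Collector (not the
# shell) resolves them from its environment at startup.
render_config_fragment() {
  cat <<'EOF'
config:
  receivers:
    otlp:
      protocols:
        grpc:
          endpoint: 0.0.0.0:4317
        http:
          endpoint: 0.0.0.0:4318
  processors:
    batch: {}
  exporters:
    otlphttp/dt:
      endpoint: "https://${DT_ENV}/api/v2/otlp"
      headers:
        Authorization: "Api-Token ${DT_TOKEN}"
  service:
    pipelines:
      traces:
        receivers: [otlp]
        processors: [batch]
        exporters: [otlphttp/dt]
      metrics:
        receivers: [otlp]
        processors: [batch]
        exporters: [otlphttp/dt]
      logs:
        receivers: [otlp]
        processors: [batch]
        exporters: [otlphttp/dt]
EOF
}

# Usage: render_config_fragment   # prints the fragment to stdout
```

The fragment belongs under spec in the OpenTelemetryCollector resource, indented one level.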

6- Install the OpenTelemetry Operator

Before deploying the OTel Demo, you must first install the OTel Operator in your Kubernetes cluster.

If you don’t have a Kubernetes cluster up and running, feel free to spin one up now. For your convenience, I have included 2 different ways for spinning up a Kubernetes cluster. Pick whichever one you like best, or feel free to create a Kubernetes cluster in your favourite cloud provider.

Option 1: Create a KinD cluster locally:

./src/scripts/00-create-kind-cluster.sh

Option 2: Create a GKE cluster on Google Cloud:

Make a copy of .env:

cp .env secrets.env

✨ NOTE: While you can use the original .env, if you have any sensitive info that you don’t want committed to version control, you should use secrets.env, as it is in .gitignore.

Edit the following values in the secrets.env file (you can ignore the other stuff in that file):

GCP_PROJECT_NAME=<your_gcp_project_name>

# e.g. us-east1

GCP_REGION=<your_gcp_region>

# e.g. us-east1-c

GCP_ZONE=<your_gcp_zone>

# e.g. e2-standard-8

GKE_MACHINE_TYPE=<your_gke_machine_type>

Create the cluster:

./src/scripts/00-create-gke-cluster.sh secrets.env

Verify that the cluster has been created:

kubectl get nodes

Once the cluster is up and running, you should get something like this:

NAME                      STATUS   ROLES           AGE   VERSION
otel-demo-control-plane   Ready    control-plane   21s   v1.33.1

Now you’re ready to install the OpenTelemetry Operator. This will install cert-manager (Operator pre-requisite) and the OTel Operator:

./src/scripts/02-install-otel-operator.sh

Make sure that cert-manager is up and running:

kubectl get pods -n cert-manager

Sample output:

NAME                                       READY   STATUS    RESTARTS   AGE
cert-manager-7f6665fd8c-gp8vl              1/1     Running   0          9m32s
cert-manager-cainjector-666564dc88-crzr9   1/1     Running   0          9m32s
cert-manager-webhook-fd94896cd-d6s5v       1/1     Running   0          9m32s

And then make sure that the Operator is up and running:

kubectl get pods -n opentelemetry-operator-system

Sample output:

NAME                                                         READY   STATUS    RESTARTS   AGE
opentelemetry-operator-controller-manager-7dd6b7c9c9-pxwzg   2/2     Running   0          9m11s

7- Deploy the Kubernetes manifests

This will deploy the OTel Demo and the OpenTelemetryCollector resources:

./src/scripts/03-deploy-resources.sh

The above script will:

1) Create a namespace called otel-demo

2) Deploy the Secrets file created in step 5

3) Deploy OTel Operator resources, including the OpenTelemetryCollector resource

4) Deploy the OTel Demo manifest

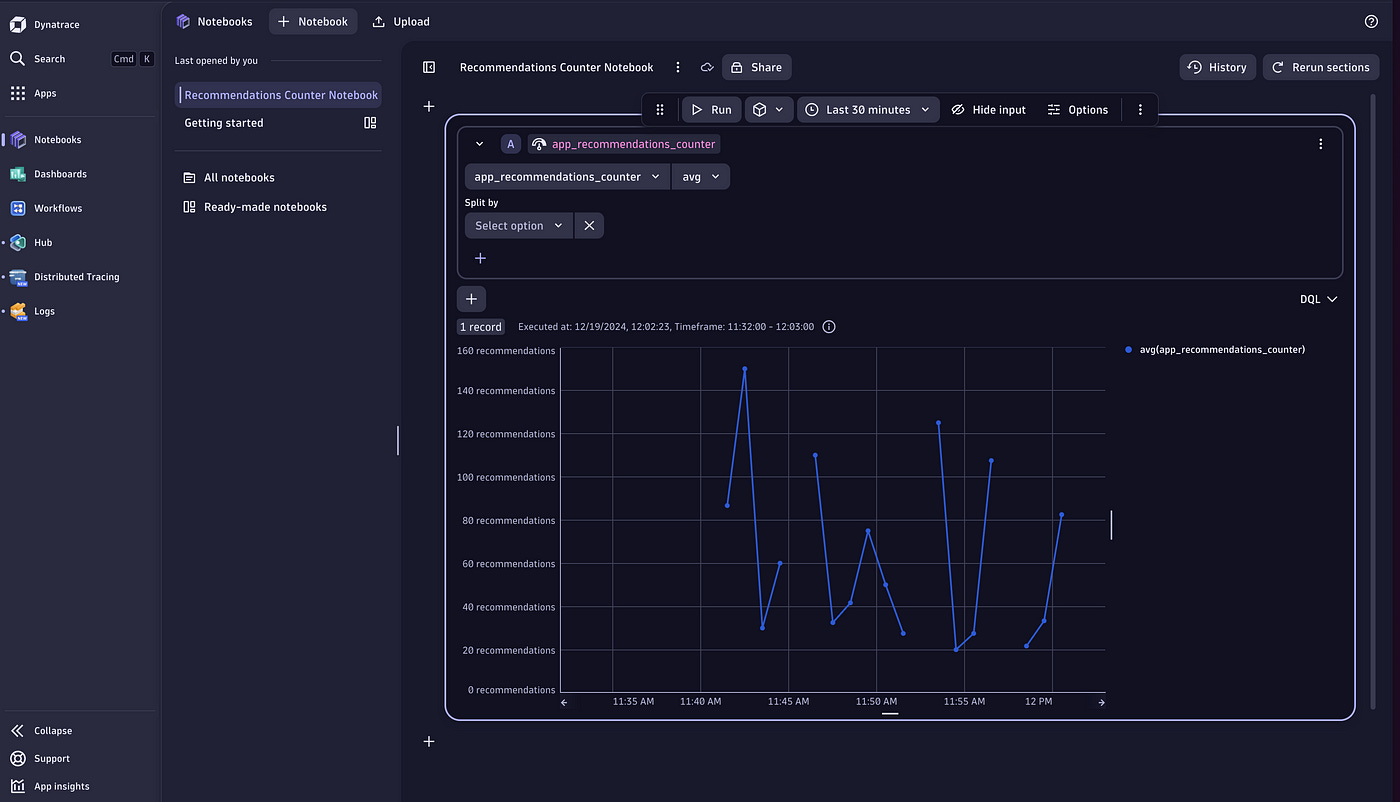
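Before heading over to your backend, it can be worth confirming that the Operator picked up the custom resource and that the Collector actually rolled out. A minimal sketch, assuming the resource name and namespace used in this post, statefulset mode, and a hypothetical helper called verify_collector:

```shell
#!/usr/bin/env bash
# Sketch: confirm the OpenTelemetryCollector exists and its StatefulSet rolled out.
# Arguments: CR name, namespace. Assumes spec.mode is statefulset.
verify_collector() {
  local cr_name="$1" namespace="$2"

  # The custom resource itself
  kubectl get opentelemetrycollector "$cr_name" -n "$namespace" || return 1

  # The StatefulSet the Operator created from it (<cr_name>-collector)
  kubectl rollout status "statefulset/${cr_name}-collector" \
    -n "$namespace" --timeout=180s
}

# Usage: verify_collector otel otel-demo
```

If the rollout check times out, the Operator and Collector pod logs are the first place I’d look.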

8- Explore OTel data in Dynatrace!

Once everything is deployed, you can log into Dynatrace to explore the OTel data emitted by the OTel demo.

I won’t be going in-depth on how to navigate the Dynatrace UI; however, feel free to check out my video series, Dynatrace Can Do THAT With OpenTelemetry?, which includes 6 episodes so far! 😁

Final Thoughts

Swapping out the OTel Collector in the OTel Demo manifest for a Collector managed by the OTel Operator wasn’t quite as daunting as I thought it would be. Once you understand which resources are required for running the Collector in Kubernetes behind the scenes, and how the OTel Operator-managed Collector works, the swap goes from scary to manageable. Hopefully, having that extra bit of background makes it less scary for you too!

Whether you just want to learn how to use the OTel Demo with the OTel Operator on Kubernetes, or are looking to swap out your Collectors on Kubernetes for Operator-managed ones, you should now have the tools at your disposal to explore, experiment, and further your learnings!

I will now leave you with a photo of my favourite animal, the capybara. I got to hang out with this one in real life at the Cappiness Capybara Café on a recent trip to Tokyo for KubeCon Japan.

Until next time, peace, love, and code. 🖖💜👩💻